Have you played the game of “20 Questions” where you try to guess an object by asking yes-or-no questions?

For example, you try to guess the animal your friend has in mind by asking up to 20 yes-or-no questions.

Did you know that decision trees are as intuitive as playing this game of “20 Questions”?

Just like asking 'Is it a mammal?' can eliminate entire categories of animals in a heartbeat, decision trees work by asking the right questions to slice through data chaos and deliver clarity.

Let’s break decision trees down into simple, easy-to-understand chunks, covering the following topics:

Key Takeaways:

What Exactly Is a Decision Tree?

- Decision trees mimic how humans make decisions by asking broad, impactful questions first, then getting more specific.

- They resemble a flowchart or a “Choose Your Own Adventure” book for data-driven decisions.

How Does the Decision Tree Algorithm Work?

- Decision trees predict outcomes by splitting data based on features, like “Is the fruit round?”

- Each split narrows possibilities until reaching a final prediction at the “leaf” nodes.

Real-Life Examples and Applications

- Used in fields like healthcare, marketing, and finance to simplify complex decisions.

- Example: A doctor diagnosing patients or a bank evaluating loan eligibility.

2 Types of Decision Trees

- Classification Trees: Sort items into categories (e.g., species classification).

- Regression Trees: Predict numerical values (e.g., estimating house prices).

What Is the Most Popular Decision Tree?

- CART (Classification and Regression Trees) is widely used for its versatility and for its pruning step, which helps reduce overfitting.

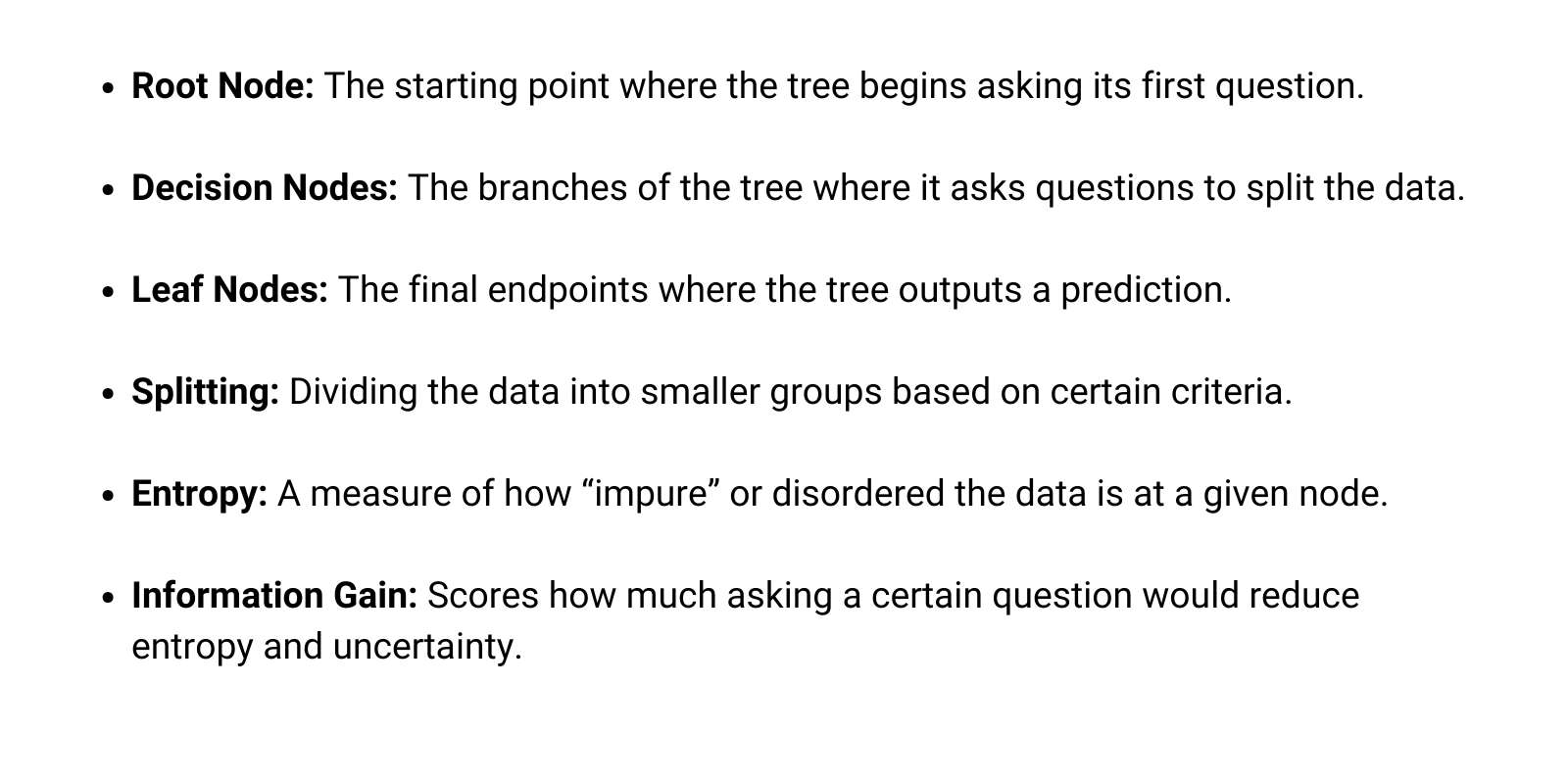

Decision Tree Terminologies

- Key terms include root nodes, decision nodes, leaf nodes, entropy, and information gain.

- These concepts guide how decision trees structure and refine predictions.

Information Gain: How To Choose the Best Attribute at Each Node

- Decision trees prioritize questions with high information gain to split data efficiently.

- This process reduces uncertainty and creates purer data subsets.

How Do Decision Trees Use Entropy?

- Entropy measures data impurity, aiming for splits that create distinct, predictable groups.

- Lower entropy indicates a cleaner, more organized dataset.

Gini Impurity or Index

- Similar to entropy, Gini impurity evaluates data disorder at each split.

- The goal is to create pure data splits with minimal mixed elements.

When To Stop Splitting?

- Decision trees stop splitting when further division doesn’t improve predictions or when data becomes fully classified.

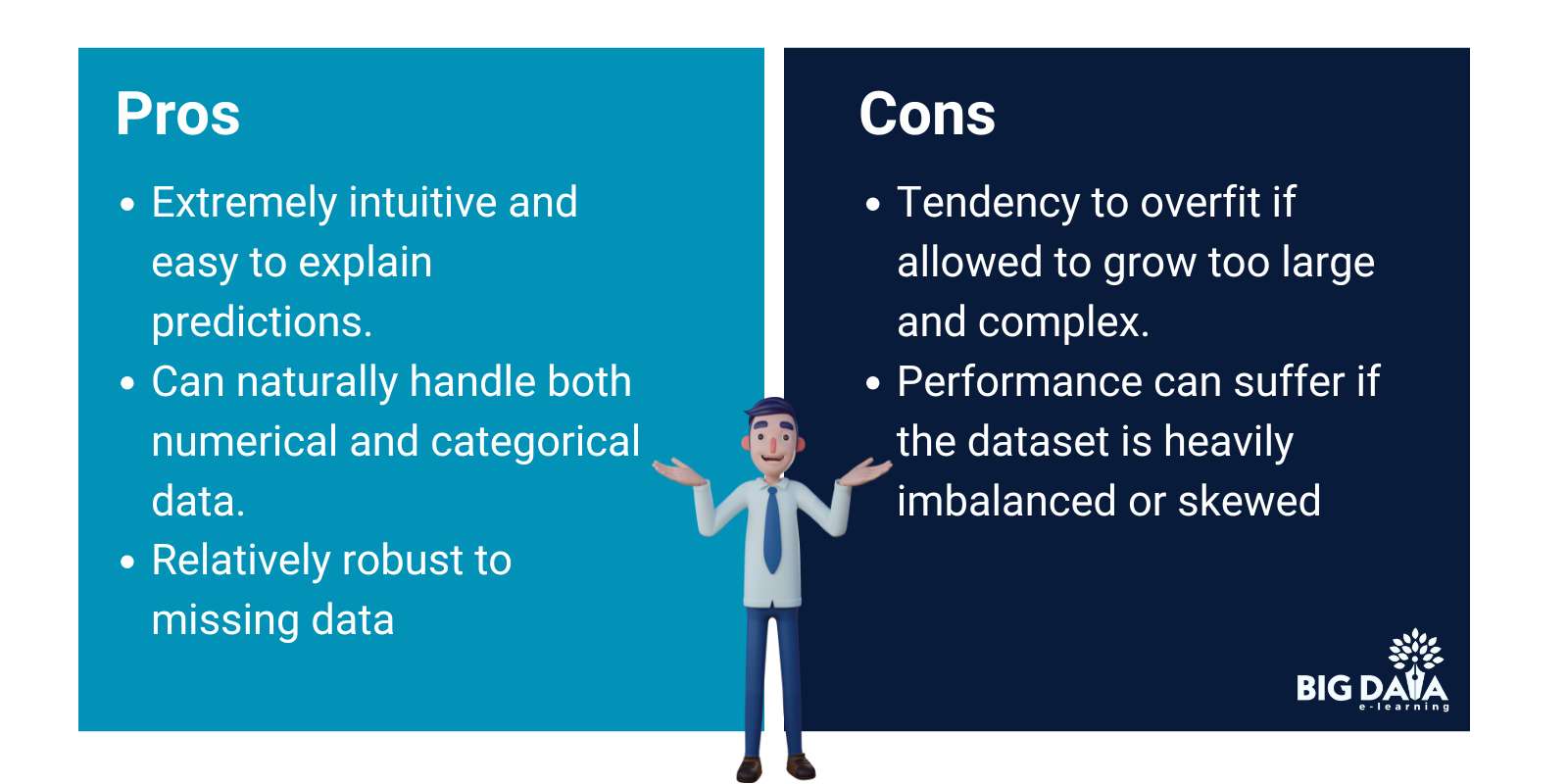

Advantages and Disadvantages of the Decision Tree

- Advantages: Easy to interpret, handles mixed data types, and is robust to missing data.

- Disadvantages: Can overfit when the tree grows too large and can struggle with imbalanced data.

Sounds good? Let’s get started!

What Exactly Is a Decision Tree?

Imagine you’re a little kid trying to guess your friend’s favorite animal.

What’s the first question you’d ask to start narrowing it down?

You’d probably go with something broad like, “Is it a mammal?” rather than asking, “Is it a cat?” right off the bat, right?

By posing that initial, wide-ranging question, you’ve already eliminated a huge chunk of non-mammal animals from the realm of possibilities.

Smart thinking!

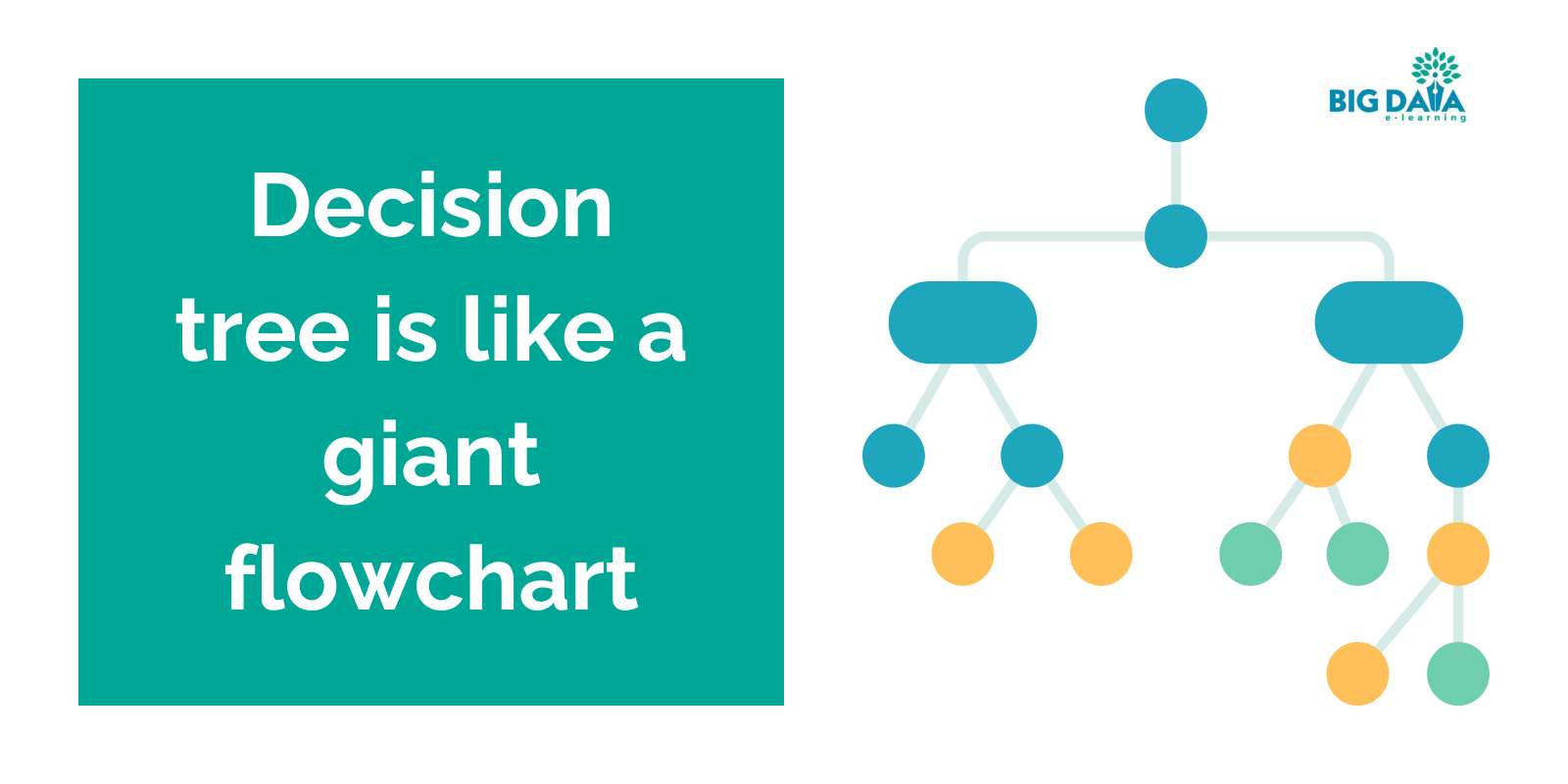

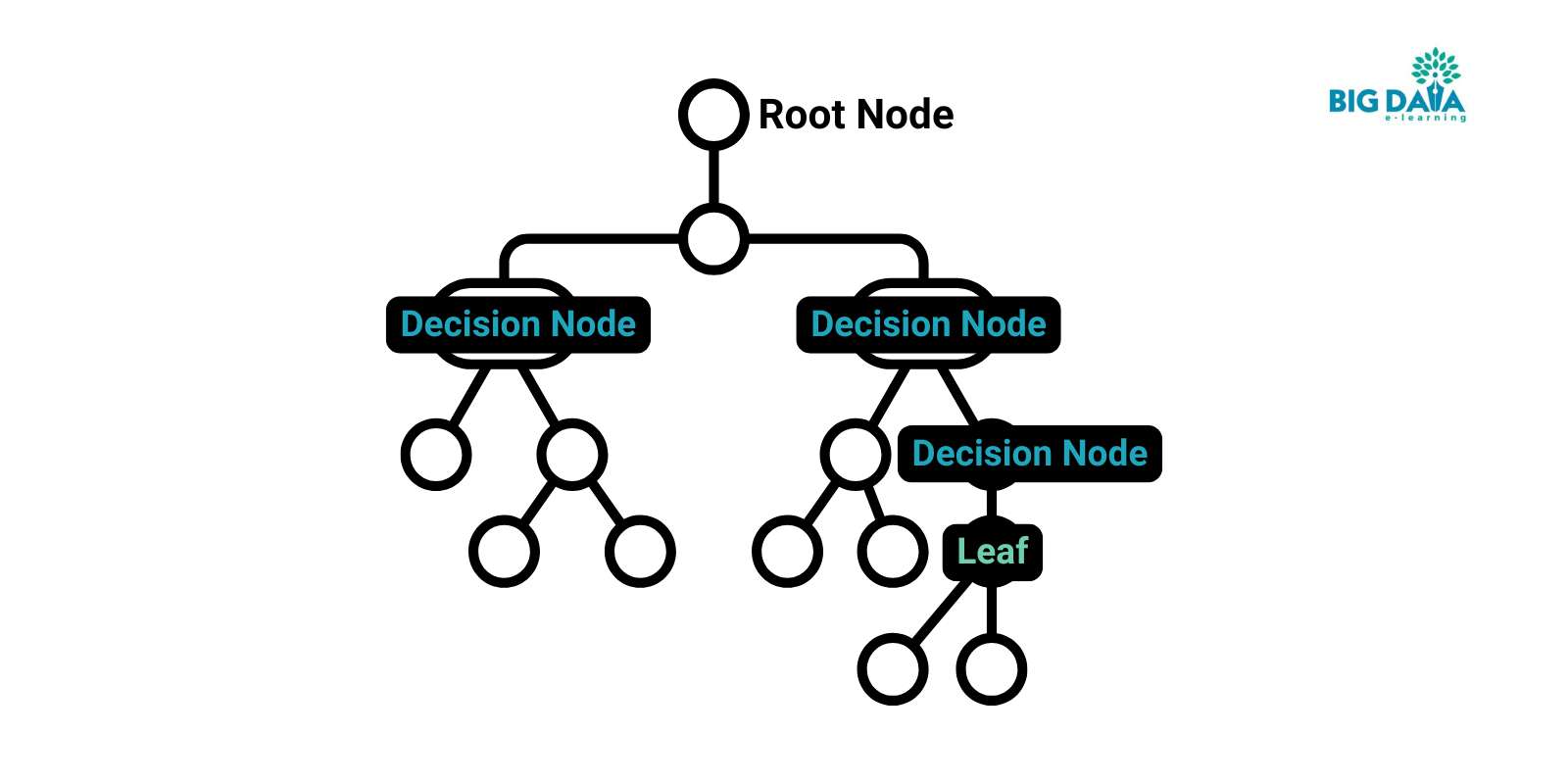

Well, that’s essentially how decision trees work in a nutshell.

They start by asking the big, broad questions that split the data into distinct groups.

Then they follow up with increasingly specific queries on each “branch” until they reach a final prediction at the “leaf” nodes.

It’s like a giant flowchart or a “Choose Your Own Adventure” book but for making data-driven decisions.

Stick with me here, because now we’re going to break down exactly how decision trees turn questions into predictions, with an example that’s as simple as sorting fruit.

How Does the Decision Tree Algorithm Work?

For a real-world example, let’s say you’re building a decision tree to predict whether a fruit is an apple or an orange based on its:

- Color

- Size

- Texture

The tree might first ask “Is the fruit round?”

- If yes, it moves to “Is it red or green?”

- If red, it predicts “apple.”

- If green, it asks about texture, and so on until reaching a final prediction.
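To make that walk-through concrete, here is a minimal sketch of the same tree written as plain `if` statements. The function name `predict_fruit`, the texture values, and every branch the text above does not spell out are assumptions made for illustration only.

```python
def predict_fruit(is_round: bool, color: str, texture: str) -> str:
    """Hand-written version of the apple-vs-orange tree described above."""
    if not is_round:
        # Assumption: the walk-through does not cover non-round fruit.
        return "unknown"
    if color == "red":
        return "apple"
    if color == "green":
        # Assumption: smooth skin means apple, bumpy skin means orange.
        return "apple" if texture == "smooth" else "orange"
    # Assumption: any other color falls through to orange.
    return "orange"


print(predict_fruit(True, "red", "smooth"))   # apple
print(predict_fruit(True, "green", "bumpy"))  # orange
```

A real decision tree learns these questions from data instead of having them typed in by hand, but the finished model behaves just like this chain of checks.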

See, not so tough!

Let’s shift gears and see how decision trees work their magic in the real world.

Real-Life Examples and Applications

Decision trees are used all over the place in the real world — from health care to marketing to finance.

Imagine a doctor trying to diagnose a patient based on their symptoms.

They could use a decision tree flowchart that starts by asking about key symptoms like fever or fatigue.

Each branch leads to more specific follow-up questions until a final diagnosis is reached.

Or let’s say a bank wants to predict whether someone is a good candidate for a loan.

Their decision tree might kick things off by asking about the applicant’s annual income.

- If it’s below a certain threshold, it could then evaluate their credit score and outstanding debts.

- If their income is high, it may look at factors like their employment history instead.

The applications are endless!

Decision trees provide an intuitive way to make sense of complex decision-making processes involving multiple factors.

Here’s where it gets interesting:

Did you know that decision trees come in two distinct flavors depending on what you’re predicting?

2 Types of Decision Trees

There are two main flavors of decision trees you should know:

- Classification Trees: These are used when you’re trying to sort items into distinct categories or classes — like if you wanted to classify different species of animals based on their characteristics.

- Regression Trees: These come in handy when you need to predict a numerical value rather than a category. For example, estimating a house’s market price based on its size, location, etc.
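One way to picture the difference is to look at what happens at a leaf. In the usual CART-style setup, a classification leaf answers with the most common class among the training samples that landed there, while a regression leaf answers with their average value. The sketch below assumes exactly that; the function names and the sample data are made up for illustration.

```python
from collections import Counter
from statistics import mean


def classification_leaf(labels):
    """Predict the most common class among the samples in this leaf."""
    return Counter(labels).most_common(1)[0][0]


def regression_leaf(values):
    """Predict the average target value of the samples in this leaf."""
    return mean(values)


# Hypothetical samples that ended up in one leaf of each kind of tree.
print(classification_leaf(["cat", "dog", "cat"]))
print(regression_leaf([250_000, 300_000, 350_000]))
```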

Guess what happened when researchers wanted a ‘versatile’ decision tree that could tackle almost any task?

This decision tree emerged as a frontrunner for some very good reasons…

Keep reading to find out which one.

What Is the Most Popular Decision Tree?

While there’s no single “most popular” decision tree algorithm, one strong contender is CART (Classification and Regression Trees).

A few key reasons CART is so widely used:

- Versatility: It handles both classification (sorting into categories) and regression (predicting values) tasks with ease.

- Pruning Power: CART can “prune”, or get rid of, unnecessary branches that don’t actually improve the model’s accuracy. This helps reduce overfitting.

- Missing Data: It has strategies to still make predictions even if some data is missing.

But at the end of the day, there’s no universal “best” decision tree — it depends on the specific problem you’re solving for.

Let’s pause for a quick vocabulary session to ensure we’re all on the same page.

Here is a breakdown of the essential terms you’ll need to understand decision trees better.

Decision Tree Terminologies

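Let’s quickly go over some key decision tree vocabulary:

- Root node: The very first question at the top of the tree, where all the data starts.

- Decision node: Any later question that splits the data into further branches.

- Leaf node: An end point with no more questions, where the final prediction is made.

- Entropy: A measure of how mixed up (impure) the data at a node is.

- Information gain: How much a split reduces that impurity.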
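If it helps to see root, decision, and leaf nodes in code, here is a small sketch of a tree as a data structure. The `Node` class, the `predict` helper, and the animal questions are all hypothetical names chosen for this example, not part of any particular library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    question: Optional[str] = None    # yes-or-no feature tested at this node
    yes: Optional["Node"] = None      # branch to follow when the answer is yes
    no: Optional["Node"] = None       # branch to follow when the answer is no
    prediction: Optional[str] = None  # set only on leaf nodes

    def is_leaf(self) -> bool:
        return self.prediction is not None


def predict(node: Node, answers: dict) -> str:
    """Walk from the root down to a leaf, following the answers."""
    while not node.is_leaf():
        node = node.yes if answers[node.question] else node.no
    return node.prediction


# The root node asks the broadest question; leaves hold the final guesses.
root = Node(
    question="is_mammal",
    yes=Node(question="barks", yes=Node(prediction="dog"), no=Node(prediction="cat")),
    no=Node(prediction="eagle"),
)

print(predict(root, {"is_mammal": True, "barks": False}))  # cat
```

Here `root` is the root node, the inner `barks` node is a decision node, and the three nodes carrying a `prediction` are leaf nodes.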

Simple enough, right?

Let’s dive deeper into those last two terms…

Have you ever wondered how decision trees decide which question to ask first?

The answer lies in a powerful concept called ‘information gain’.

Information Gain: How To Choose the Best Attribute at Each Node

The key to any successful decision tree is asking the right questions at each node to split the data into its purest, most distinct groups from the get-go.

And that’s where information gain comes in!

Remember that classic game 20 Questions, where you try to guess an object by asking yes-or-no questions?

Some queries are way more useful for narrowing things down than others.

For example, asking “Is it an animal?” usually tells you far more than asking “Does it have four legs?”

Information gain measures how much a question reduces your uncertainty about the answer, so higher ‘information gain’ means a better, more efficient split.

So decision trees prioritize splitting the data on features with the highest ‘information gain’ first — just like how you ask the most differentiating questions early on in 20 Questions.

It’s all about reducing disorder as quickly as possible!

But wait, how does information gain measure uncertainty?

It all boils down to entropy, and I promise it’s easier to grasp than it sounds.

How Do Decision Trees Use Entropy?

Okay, but how exactly does information gain measure that “disorder” or uncertainty it’s trying to reduce?

Well, that’s where entropy comes into play.

Entropy measures the “impurity” of the data at a given node.

Let’s use a candies analogy to illustrate:

Imagine you have a bag of mixed candies with an unpredictable, even blend of chocolates, hard candies, gummies, etc.

- Reaching in blindly, you have no idea what you’ll grab — that’s an example of high entropy since everything is completely jumbled up.

- But if the bag only contained chocolate bars, that would be very low entropy since it’s super predictable.
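For the curious, the two candy bags can be scored with the standard Shannon entropy formula, H = -Σ p·log2(p), where p is the share of each candy type in the bag. The snippet below is a minimal sketch; the bag contents are invented for illustration.

```python
from collections import Counter
from math import log2


def entropy(items):
    """Shannon entropy in bits: 0 means perfectly pure, higher means more mixed."""
    total = len(items)
    counts = Counter(items)
    # p * log2(1 / p) is the same as -p * log2(p), summed over each type.
    return sum((c / total) * log2(total / c) for c in counts.values())


mixed_bag = ["chocolate", "hard candy", "gummy"] * 2  # even blend of three types
pure_bag = ["chocolate"] * 6                          # only chocolate bars

print(entropy(mixed_bag))  # about 1.58 bits: hard to guess what you'll grab
print(entropy(pure_bag))   # 0.0 bits: completely predictable
```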

Decision trees want to reduce entropy as much as possible by splitting the data into its purest, most unmixed groups from the start.

So they prioritize asking questions (with high information gain) that divide everything into low-entropy “bags” ASAP.
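Putting the two ideas together, information gain is usually computed as the entropy of the data before a split minus the size-weighted average entropy of the groups after it. Here is a rough sketch under that definition; the fruit lists and the two candidate splits are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(items):
    """Shannon entropy in bits of a list of labels."""
    total = len(items)
    return sum((c / total) * log2(total / c) for c in Counter(items).values())


def information_gain(parent, children):
    """Entropy before the split minus the weighted entropy after it."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted


parent = ["apple"] * 4 + ["orange"] * 4

# A question that separates the fruit perfectly.
good_split = [["apple"] * 4, ["orange"] * 4]
# A question that leaves both groups as mixed as before.
poor_split = [["apple", "apple", "orange", "orange"]] * 2

print(information_gain(parent, good_split))  # 1.0
print(information_gain(parent, poor_split))  # 0.0
```

The tree would pick the first question, because it removes all of the uncertainty in a single step.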

But wait, there’s more…

Is entropy the only way to measure disorder?

Let’s explore another approach called ‘Gini impurity’, which works like sorting fruit baskets.

Gini Impurity or Index

In addition to entropy, Gini impurity is another way that decision trees can evaluate the disorder or impurity at each node.

Let’s use an analogy to wrap our brains around it:

Imagine you’re sorting different types of fruit into baskets at a grocery store.

You want each basket to only contain one pure, unmixed fruit type — not a jumbled mess of apples, oranges, and bananas.

The baskets with just one fruit type have low Gini impurity, while the mixed-up baskets have higher impurity scores.

The goal is to get everything into perfect single-fruit-type “baskets” as quickly as possible through iterative splitting.
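In numbers, Gini impurity is commonly defined as 1 - Σ p², where p is the share of each fruit type in a basket. You can read it as the chance of mislabeling a randomly picked fruit if you guessed its type according to the basket’s mix. A minimal sketch, with made-up baskets:

```python
from collections import Counter


def gini(items):
    """Gini impurity: 0 for a pure basket, closer to 1 for a very mixed one."""
    total = len(items)
    return 1.0 - sum((c / total) ** 2 for c in Counter(items).values())


pure_basket = ["apple"] * 6
mixed_basket = ["apple", "orange", "banana"] * 2

print(gini(pure_basket))   # 0.0
print(gini(mixed_basket))  # about 0.67
```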

Every tree needs to stop growing at some point.

So how do decision trees know when enough is enough?

When To Stop Splitting?

Circling back to our 20 Questions comparison, at some point you have to actually stop asking questions once you’re reasonably certain what the object is, right?

You don’t want to just infinitely keep guessing.

Similarly, decision trees use a couple of stopping criteria to determine when to stop splitting data any further:

- When all remaining samples belong to the same target class (e.g., they’re all apples)

- When splitting further won’t actually increase the model’s predictive performance
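Those two criteria can be sketched as a tiny check that runs before every split. The function name `should_stop` and the `min_gain` threshold are assumptions for illustration; real libraries expose their own settings for this.

```python
def should_stop(labels, best_gain, min_gain=0.01):
    """Return True when a node should become a leaf instead of splitting again."""
    # Criterion 1: every remaining sample belongs to the same class.
    if len(set(labels)) <= 1:
        return True
    # Criterion 2: the best available split barely improves things.
    # Assumption: "barely" means a gain below the hypothetical min_gain.
    return best_gain < min_gain


print(should_stop(["apple", "apple"], best_gain=0.5))     # True: already pure
print(should_stop(["apple", "orange"], best_gain=0.001))  # True: not worth splitting
print(should_stop(["apple", "orange"], best_gain=0.4))    # False: keep splitting
```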

The goal is finding the right balance between splitting enough to make accurate predictions — but not splitting too much and overfitting to the quirks of the training data.

Once the model hits diminishing returns on splitting, it’s time to stop!

So, with all this in mind, why are decision trees so popular, and what are their limitations?

Let’s wrap it up with the pros and cons.

Advantages and Disadvantages of the Decision Tree

So those are the key principles behind how decision trees operate! But like any model, they have their own pros and cons to consider:

Advantages

- Extremely intuitive and easy to explain predictions. (“It’s an apple because it was round and red.”)

- Can naturally handle both numerical and categorical data.

- Can be relatively robust to missing data, since some algorithms (such as CART) have built-in strategies for it.

Disadvantages

- Tendency to overfit if allowed to grow too large and complex.

- Performance can suffer if the dataset is heavily imbalanced or skewed.

At the end of the day, decision trees trade a bit of predictive power for awesome interpretability.

You can quite literally see exactly how they make decisions!

Conclusion

There you have it — all the core decision tree concepts demystified and broken down into simple, easy-to-follow language using lots of real-world examples and analogies.

We covered:

- What decision trees actually are

- How they “think” using entropy and information gain

- Key terminology like Gini impurity

- The criteria for when to stop splitting data into more branches

The next time an algorithm seems daunting, just remember that anything can be made intuitive by putting it into terms you can wrap your head around.

So don’t be intimidated, keep asking questions, and most importantly — have fun with the learning process!

You’ve got this.
